Building Vibe Coder: A Journey into Private, Agentic AI Coding

I’m excited to share the story behind my latest project, Vibe Coder, a command-line coding assistant powered by local large language models (LLMs). You can check it out on GitHub at https://github.com/Legorobotdude/PrivateCode and visit the project website at https://www.vibecoder.gg/. This post dives into why I built Vibe Coder, the thought process that shaped it, the technical nuts and bolts, and why running it locally matters—especially when compared to tools like Claude or Cursor. Plus, I’ll explore how agentic AI takes this project to the next level and what that means for the future of coding.

The Spark: Privacy Meets Productivity

The idea for Vibe Coder started with a simple frustration: I wanted an AI coding assistant that didn’t ship my code off to some distant server. As a developer, my projects—whether personal experiments or client work—are often sensitive. Sending them to cloud-based services like Claude (from Anthropic) or Cursor (the AI-powered IDE) felt like handing over my digital diary to a stranger. Sure, these tools are powerful, but their reliance on cloud infrastructure comes with a privacy trade-off. I needed something that stayed on my machine, respected my data, and still delivered the coding superpowers I’d come to expect from modern AI.

That’s when I stumbled upon Ollama, a platform for running LLMs locally. It was a lightbulb moment: what if I could harness the power of models like Llama or Code Llama right on my laptop, with no internet connection required once a model is downloaded? From there, Vibe Coder was born: a tool that combines the convenience of an AI assistant with the security of local execution. But I didn’t want it to just answer questions; I wanted it to act: to write code, edit files, and run commands autonomously. That’s where the concept of agentic AI came in, and it’s been a game-changer.

The Thought Process: Designing for Developers

Building Vibe Coder wasn’t just about slapping together some Python scripts—it was about creating something practical and intuitive for developers like me. Here’s how I approached it:

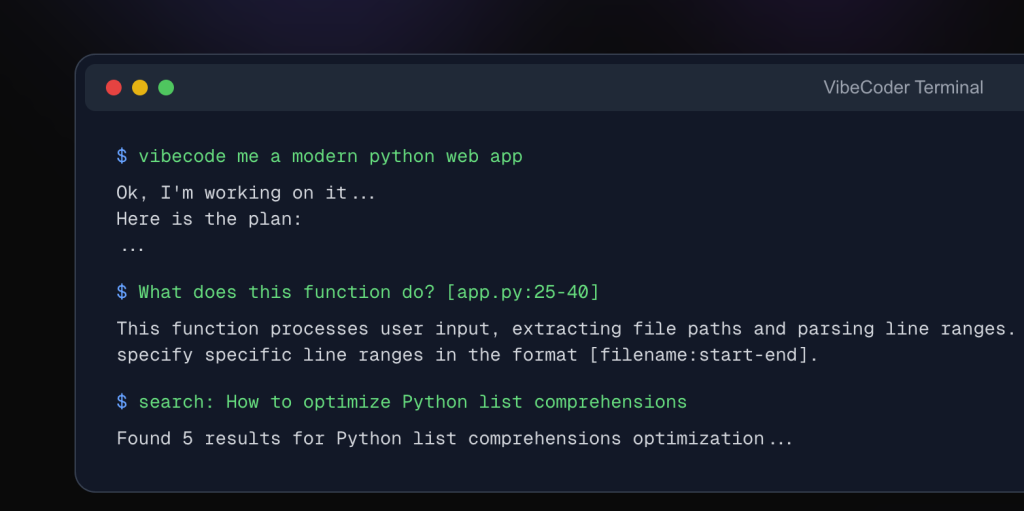

- Command-Line Focus: I chose a command-line interface because it’s where I spend most of my time as a coder. No bloated GUI, just a lean, terminal-based tool that fits seamlessly into my workflow. Whether I’m debugging a script or sketching out a new project, Vibe Coder is right there in my shell.

- Privacy First: By running models locally through Ollama, I ensured that no code or prompts are ever sent to a model provider. This was non-negotiable. Unlike Claude or Cursor, which process your input on their servers, Vibe Coder keeps inference entirely in-house; the only traffic that leaves your machine is what you explicitly ask for, such as a web search or a URL you reference. It’s like having a personal coding assistant who never gossips.

- Agentic Capabilities: I didn’t want a passive Q&A bot. I wanted Vibe Coder to do things—create files, edit code, execute commands, and even plan multi-step tasks. This agentic approach means it’s not just a helper; it’s a collaborator that takes initiative based on my instructions.

- Flexibility: Developers work on all kinds of projects, so Vibe Coder had to be versatile. It supports web searches (via DuckDuckGo), file operations, command execution, and model switching (e.g., from a general-purpose LLM to a code-specific one). I also added features like line-specific file reading (e.g., [file.py:10-20]) to make it precise and powerful.

- User Control: With great power comes great responsibility. I built in safety checks—confirmation prompts for file changes and command runs, and a whitelist for safe commands—to prevent accidental chaos. The goal was empowerment, not recklessness.

The result? A tool that feels like a trusted pair programmer, running entirely on my hardware, ready to tackle whatever I throw at it.

Technical Details: How Vibe Coder Works

Let’s pop the hood on Vibe Coder. It’s written in Python (check the source at GitHub), and here’s how it’s put together:

- Core Engine: Vibe Coder uses Ollama’s API (running at http://localhost:11434 by default) to interface with local LLMs. I set up a default model (currently “qwq,” but you can swap it out), and the script makes API calls with the requests library. The get_ollama_response function is the heart of the AI interaction: it sends the conversation history to the model and returns its answer.

- Command Parsing: The CLI uses prefix-based commands like edit:, run:, create:, and search:. For example, “edit: [main.py] Add error handling” triggers the handle_edit_query function, which reads the file, sends it to the LLM along with the instruction, and applies the changes after user confirmation. Regular expressions extract file paths and URLs from square brackets (e.g., [file.py] or [https://example.com]).

- Agentic Features: The plan: command is where the agentic magic happens. Inspired by autonomous AI agents, it breaks down a high-level request (e.g., “plan: Build a Python script to fetch weather data”) into executable steps such as creating files, writing code, and running commands. The LLM returns a JSON array of steps, which Vibe Coder executes with user approval. The handle_plan_query function orchestrates the process.

- File Handling: Functions like read_file_content and write_file_content manage file I/O, with robust encoding detection via chardet and line-range support. The generate_colored_diff function (powered by difflib and colorama) shows changes in a colorful, readable format.

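- Safety: Command execution (execute_command) uses subprocess, with a safety check (is_safe_command) that allows whitelisted commands (e.g., python, git) and blocks dangerous ones (e.g., rm). Everything runs in a configurable working directory, which keeps operations scoped to your project. That isn’t a true sandbox, though: an allowed command like python can still do anything your user account can, which is why the confirmation prompts matter.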

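- Thinking Blocks: Reasoning models such as QwQ wrap their intermediate reasoning in <think> tags before giving a final answer. Vibe Coder handles these blocks with process_thinking_blocks, which I’ve tuned to truncate verbose output and prevent overflow, a challenge I tackled after some trial and error.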
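The Core Engine item above boils down to one HTTP call per conversation turn. Below is a minimal sketch of what a get_ollama_response-style function might look like, assuming Ollama’s non-streaming /api/chat endpoint. It is not Vibe Coder’s actual code: the helper names (build_chat_payload, extract_reply, get_ollama_response_sketch) are hypothetical, and it uses the standard-library urllib.request instead of requests so it runs without extra dependencies.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
DEFAULT_MODEL = "qwq"


def build_chat_payload(history, model=DEFAULT_MODEL):
    """Build a non-streaming /api/chat request body from the conversation history.

    `history` is a list of {"role": ..., "content": ...} dicts, e.g.
    [{"role": "user", "content": "Explain this function"}].
    """
    return {"model": model, "messages": list(history), "stream": False}


def extract_reply(response_body):
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return response_body["message"]["content"]


def get_ollama_response_sketch(history, model=DEFAULT_MODEL, timeout=300):
    """Send the full history to the local Ollama server and return the reply text.

    Hypothetical stand-in for get_ollama_response; uses urllib instead of requests.
    """
    data = json.dumps(build_chat_payload(history, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

Ollama’s chat endpoint does not remember earlier turns, so the caller keeps the history: append each reply as {"role": "assistant", "content": ...} before the next user message, and send the whole list on every call.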
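Prefix dispatch and bracket extraction, as described in the Command Parsing item, can be sketched with two small functions. The regular expressions and the (path, start, end) tuple format are assumptions for illustration, not the project’s actual patterns; they also cover the [file.py:10-20] line-range syntax mentioned earlier.

```python
import re

# Prefixes named in the post; plan: is included because it is parsed the same way.
COMMAND_PREFIXES = ("edit:", "run:", "create:", "search:", "plan:")

# Any [...] span that contains no nested brackets.
BRACKET_RE = re.compile(r"\[([^\[\]]+)\]")
# A bracketed file reference with a line range, e.g. file.py:10-20.
RANGE_RE = re.compile(r"^(?P<path>.+?):(?P<start>\d+)-(?P<end>\d+)$")


def split_command(query):
    """Return (prefix, rest) for a prefixed command, or (None, query) for plain chat."""
    stripped = query.strip()
    for prefix in COMMAND_PREFIXES:
        if stripped.lower().startswith(prefix):
            return prefix[:-1], stripped[len(prefix):].strip()
    return None, stripped


def extract_references(text):
    """Split bracketed references into URLs and (path, start, end) file references."""
    urls, files = [], []
    for ref in BRACKET_RE.findall(text):
        ref = ref.strip()
        if ref.startswith(("http://", "https://")):
            urls.append(ref)
            continue
        match = RANGE_RE.match(ref)
        if match:
            files.append((match["path"], int(match["start"]), int(match["end"])))
        else:
            files.append((ref, None, None))  # whole file, no line range
    return urls, files
```

A dispatcher would then map the returned prefix (for example "edit") to a handler such as handle_edit_query and pass along the parsed references.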
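For the plan: flow described in the Agentic Features item, the interesting parts are getting a usable JSON array out of the model’s reply and gating each step behind a confirmation. This sketch assumes a hypothetical step schema ({"type": "create_file" or "run_command", ...}); the post only says the LLM returns a JSON array of steps, so the real format may differ. parse_plan and execute_plan are illustrative names, not Vibe Coder’s functions.

```python
import json

# Hypothetical step schema: the post only says the model returns a JSON array of steps.
KNOWN_STEP_TYPES = {"create_file", "run_command"}


def parse_plan(llm_text):
    """Extract and validate the JSON array of steps from a model reply.

    Models often wrap JSON in prose or a fenced block, so this takes the span
    from the first "[" to the last "]" and parses only that.
    """
    start, end = llm_text.find("["), llm_text.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON array found in model output")
    steps = json.loads(llm_text[start:end + 1])  # JSONDecodeError is a ValueError
    for number, step in enumerate(steps, start=1):
        if not isinstance(step, dict) or step.get("type") not in KNOWN_STEP_TYPES:
            raise ValueError(f"step {number} has a missing or unknown type: {step!r}")
    return steps


def execute_plan(steps, confirm, handlers):
    """Run each step through its handler only if confirm(step) returns True.

    Returns the list of steps that were actually executed.
    """
    executed = []
    for step in steps:
        if not confirm(step):
            print(f"Skipped {step['type']} step")
            continue
        handlers[step["type"]](step)
        executed.append(step)
    return executed
```

In a terminal, confirm could be something like lambda step: input(f"Run {step['type']}? [y/N] ").strip().lower() == "y". Taking the outermost [...] span is a deliberately simple heuristic; a reply that mentions brackets in its prose before the array would need a stricter parser.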
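The File Handling item mentions line-range reads and colored diffs. Here is a standard-library-only sketch of both, assuming 1-indexed, inclusive ranges like [file.py:10-20]. It substitutes a fixed UTF-8 decode (with errors="replace") for chardet’s encoding detection, and raw ANSI escape codes for colorama; read_line_range and colored_diff are hypothetical names.

```python
import difflib

# colorama's Fore.GREEN, Fore.RED, Fore.CYAN and Style.RESET_ALL are these same sequences.
GREEN, RED, CYAN, RESET = "\033[32m", "\033[31m", "\033[36m", "\033[0m"


def read_line_range(path, start=None, end=None, encoding="utf-8"):
    """Return lines start..end (1-indexed, inclusive) of a file, or the whole file."""
    with open(path, encoding=encoding, errors="replace") as f:
        lines = f.readlines()
    if start is None:
        return "".join(lines)
    return "".join(lines[start - 1:end])


def colored_diff(old_text, new_text, filename="file"):
    """Render a unified diff: additions green, removals red, hunk headers cyan."""
    diff = difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile=f"a/{filename}",
        tofile=f"b/{filename}",
    )
    out, in_hunk = [], False
    for line in diff:
        if line.startswith("@@"):
            in_hunk = True
            out.append(f"{CYAN}{line}{RESET}")
        elif not in_hunk:  # the ---/+++ file header lines
            out.append(line)
        elif line.startswith("+"):
            out.append(f"{GREEN}{line}{RESET}")
        elif line.startswith("-"):
            out.append(f"{RED}{line}{RESET}")
        else:  # unchanged context line
            out.append(line)
    return "".join(out)
```

Tracking whether the first @@ has been seen, rather than testing for "---" or "+++", keeps a removed line whose content happens to start with "--" from being mistaken for a file header.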
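A sketch of the allowlist idea from the Safety item follows. The allowlist contents are an assumption apart from python and git, which the post names. Two choices do most of the work: only the program name (the first token) is checked against the allowlist, and the command runs as a list of arguments with no shell, so operators like && or ; cannot chain a second program onto an allowed one.

```python
import shlex
import subprocess

# Example allowlist: python and git come from the post, the rest are assumptions.
ALLOWED_COMMANDS = {"python", "python3", "git", "ls"}
# Shell operators are rejected outright so the user gets a clear refusal.
SHELL_OPERATORS = {"&&", "||", ";", "|", "&", ">", ">>", "<"}


def is_safe_command(command, allowed=ALLOWED_COMMANDS):
    """Return True if the program is allowlisted and no shell operators appear."""
    try:
        tokens = shlex.split(command)
    except ValueError:  # unbalanced quotes and similar parse errors
        return False
    if not tokens or tokens[0] not in allowed:
        return False
    return not any(token in SHELL_OPERATORS for token in tokens)


def execute_command(command, workdir, timeout=60):
    """Run an allowlisted command in workdir and return (exit code, combined output).

    The argument list goes straight to the program with no shell, so even an
    operator glued to another word (e.g. "status;rm") is only a literal argument.
    """
    if not is_safe_command(command):
        raise PermissionError(f"command not allowed: {command}")
    result = subprocess.run(
        shlex.split(command),
        cwd=workdir,
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return result.returncode, result.stdout + result.stderr
```

An allowlist like this limits which programs start, not what they do once running; a python script written by the model can still touch any file your account can, so the confirmation prompt remains the real safeguard.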
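Finally, here is a sketch of separating <think> reasoning from the answer and capping its length, in the spirit of the Thinking Blocks item. The 500-character limit and the handling of unmatched tags (for example, from a response that was cut off) are assumptions; process_thinking is a hypothetical name rather than the project’s process_thinking_blocks.

```python
import re

# Non-greedy match so several <think> blocks in one reply are captured separately.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def process_thinking(text, max_chars=500):
    """Split a reply into (thinking, answer), truncating thinking to max_chars."""
    thoughts = THINK_RE.findall(text)
    answer = THINK_RE.sub("", text)
    if "</think>" in answer:  # closing tag with no opening tag: reasoning comes first
        head, answer = answer.split("</think>", 1)
        thoughts.insert(0, head)
    if "<think>" in answer:  # opening tag that was never closed: reasoning runs to the end
        answer, tail = answer.split("<think>", 1)
        thoughts.append(tail)
    thinking = "\n".join(t.strip() for t in thoughts if t.strip())
    if len(thinking) > max_chars:
        thinking = thinking[:max_chars].rstrip() + " [...truncated]"
    return thinking, answer.strip()
```

Returning the two parts separately lets the CLI print the answer in full while showing only a trimmed view of the reasoning.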

The tech stack is lightweight but powerful, leaning on libraries like requests, beautifulsoup4 for web scraping, and colorama for terminal flair. It’s all open-source, so you can fork it and tweak it to your heart’s content!

Privacy: Local LLMs vs. Claude/Cursor

One of Vibe Coder’s biggest selling points is privacy, and it’s worth digging into why that matters. Tools like Claude and Cursor are fantastic—don’t get me wrong. They’re polished, fast, and backed by cutting-edge models. But they’re cloud-based, which means every line of code you feed them gets sent to their servers. That’s fine for open-source hobby projects, but what about proprietary work? Trade secrets? Personal scripts? Suddenly, you’re trusting a third party with your intellectual property.

With Vibe Coder, privacy is baked in. Because Ollama runs locally, all model processing happens on your machine, and your code never leaves your control. This has trade-offs: local LLMs require decent hardware (think 16GB+ RAM for larger models) and setup time (pulling models like Code Llama), but the payoff is huge. You’re not at the mercy of a company’s data policies, network outages, or potential breaches. For me, that peace of mind is worth the extra horsepower.
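The hardware point above can be made concrete with back-of-the-envelope arithmetic: the weights alone take roughly (parameters × bits per weight ÷ 8) bytes. The sketch below assumes 4-bit quantization, which is common for local models. Real quantization formats store somewhat more than their nominal bit width, and the KV cache and runtime add more on top, so treat these numbers as a floor rather than a full requirement.

```python
def estimate_weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for model weights alone, in decimal gigabytes.

    params_billion * 1e9 weights * bits / 8 bytes, divided by 1e9 bytes per GB,
    simplifies to params_billion * bits / 8. Excludes KV cache and runtime overhead.
    """
    return params_billion * bits_per_weight / 8


for label, params in [("7B", 7), ("13B", 13), ("32B (QwQ-sized)", 32)]:
    gb = estimate_weight_memory_gb(params, bits_per_weight=4)
    print(f"{label}: ~{gb:.1f} GB of weights at 4 bits per weight")
# prints 3.5 GB, 6.5 GB and 16.0 GB for the three sizes
```

By this estimate, a 32B model like QwQ already needs about 16 GB just for its 4-bit weights, which is why larger models push past the 16GB mark in practice.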

Compare that to Claude or Cursor: their convenience comes with a catch. Your code might be used to train future models (depending on their terms), and while they promise security, breaches happen. Vibe Coder sidesteps all that. It’s your AI, your rules.

Agentic AI: The Future of Coding

What really excites me about Vibe Coder is its agentic AI. Unlike traditional assistants that just spit out answers, Vibe Coder acts on your behalf. The plan: feature is a taste of what’s possible: you give it a goal, and it figures out the steps—creating files, writing code, running tests—all while keeping you in the loop with confirmations. It’s like having a junior developer who never sleeps.

This opens up wild possibilities:

- Project Bootstrapping: Say “plan: Set up a Flask web app with a database,” and watch it scaffold the files, install dependencies, and launch a server.

- Debugging Automation: Ask it to “fix errors in [app.py] and test it,” and it edits the code, runs it, and checks the output.

- Learning Tool: Newbies could use it to see how complex tasks break down into actionable steps, learning by doing.

Agentic AI shifts coding from a solo grind to a collaborative dance with an intelligent partner. It’s not about replacing developers—it’s about amplifying us. Imagine integrating Vibe Coder with Git for version control, or adding voice commands for hands-free coding. The sky’s the limit, and I’m just scratching the surface.

What’s Next for Vibe Coder?

Vibe Coder’s already a solid tool (try it out at https://www.vibecoder.gg/), but I’ve got big plans. I’m working on:

- Better Performance: Optimizing for larger responses and faster file handling.

- More Commands: New prefixes such as test: for running unit tests and commit: for Git integration.

- Community Input: It’s on GitHub (https://github.com/Legorobotdude/PrivateCode), so I’d love your feedback and contributions!

Building Vibe Coder has been a blast—a mix of problem-solving, experimentation, and a dash of stubbornness to keep it local. It’s proof that privacy and power can coexist, and that agentic AI is more than hype—it’s the future. So, grab the code, fire up Ollama, and let me know what you think. Let’s vibe code together!